*Note: there's an updated version of this post for AVI 30.X here.*

NSX Advanced Load Balancer is arguably the best load balancing solution for the Supervisor Cluster when enabling vSphere with Tanzu on a vSphere Distributed Switch configuration. It provides a lot of enterprise-grade features, such as high availability, autoscaling and metrics, and since it's included in every Tanzu edition, there's little question about which load balancing solution to use with your Kubernetes platform.

Let's go through a step-by-step journey on how to install and configure it for vSphere with Tanzu.

Hold on to your hat: this post will be a bit longer than what I usually produce.

My lab has the following scenario: three fully routable network segments, with no DHCP enabled:

- Management Network: where all my management components are placed (vCenter, NSX, ESXi and now the AVI Controller and Service Engines);

- Service Network: where the Kubernetes Services (type LoadBalancer) will be allocated;

- Workload Network: where the Tanzu Kubernetes Cluster Nodes will be placed;
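Before deploying anything, it can help to write the three segments down and confirm they don't overlap. The sketch below uses Python's standard `ipaddress` module; the subnets are hypothetical placeholders, not my lab's real values.

```python
import ipaddress

# Hypothetical lab subnets: replace them with your own values.
NETWORKS = {
    "management": ipaddress.ip_network("192.168.10.0/24"),
    "service": ipaddress.ip_network("192.168.20.0/24"),
    "workload": ipaddress.ip_network("192.168.30.0/24"),
}


def find_overlaps(networks: dict) -> list:
    """Return every (name, name) pair whose subnets overlap."""
    names = sorted(networks)
    overlaps = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if networks[a].overlaps(networks[b]):
                overlaps.append((a, b))
    return overlaps


if __name__ == "__main__":
    problems = find_overlaps(NETWORKS)
    print("overlapping segments:", problems or "none")
```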

Every environment is unique, so it's imperative that you take some time to go through the supported topologies and requirements before standing up your own solution.

First things first: the NSX Advanced Load Balancer OVA deployment, also known as the AVI Controller. It's the central control plane of the solution, responsible for the creation, configuration and management of the Service Engines and of the services that developers create on demand.

Deploying an OVA is a pretty straightforward operation that you have probably done a thousand times over the years, so I'll skip it.

Once it's done, power it on and wait for the startup process to finish the configuration (it might take around 10 minutes, depending on the environment).

Then open a browser and go to the IP address you specified during the OVA deployment.
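If you'd rather script the wait than keep refreshing the browser, a small polling loop works. This is a minimal sketch, assuming the controller UI answers on `https://<controller-ip>/` once it's up; `wait_until_ready` and `controller_responds` are hypothetical helpers, and certificate verification is disabled only because the controller still has its self-signed certificate at this point.

```python
import ssl
import time
import urllib.error
import urllib.request


def controller_responds(url: str, timeout: float = 5.0) -> bool:
    """Return True once the web server answers without a 5xx error."""
    ctx = ssl.create_default_context()
    # Self-signed certificate at this stage: skip verification for this check only.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx):
            return True
    except urllib.error.HTTPError as err:
        # A 4xx likely means the UI is up; a 5xx usually means services are still starting.
        return err.code < 500
    except OSError:
        # Connection refused, timeout, TLS handshake failure, etc.
        return False


def wait_until_ready(probe, timeout_s=900, interval_s=15,
                     sleep=time.sleep, clock=time.monotonic) -> bool:
    """Call probe() every interval_s seconds until it returns True or timeout_s elapses."""
    deadline = clock() + timeout_s
    while True:
        if probe():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

For example, `wait_until_ready(lambda: controller_responds("https://192.168.10.50/"))`, where the IP is a placeholder for your controller. The `sleep` and `clock` parameters are there so the loop can be exercised without actually waiting.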

- Create an admin account and set the password;

- In the System Settings section, create a Passphrase (it's used when backing up and restoring the controller) and set up your DNS servers and DNS domain. There's also SMTP information, which I skipped because I don't have it in my environment;

- In the Multi-Tenant section, keep the defaults and click SAVE;

Now that the system is ready to go, let's configure secure access to the controller by replacing the self-signed certificate generated during deployment.

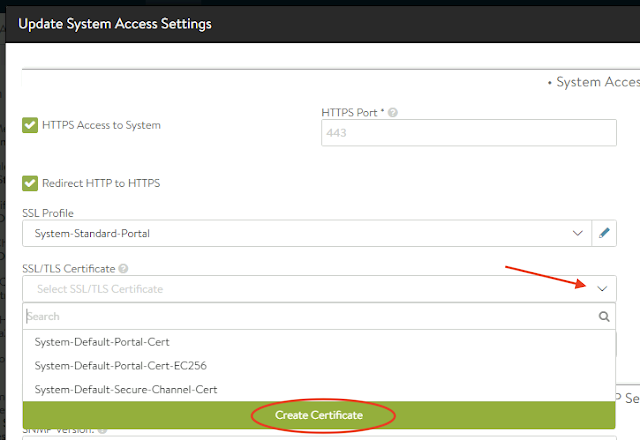

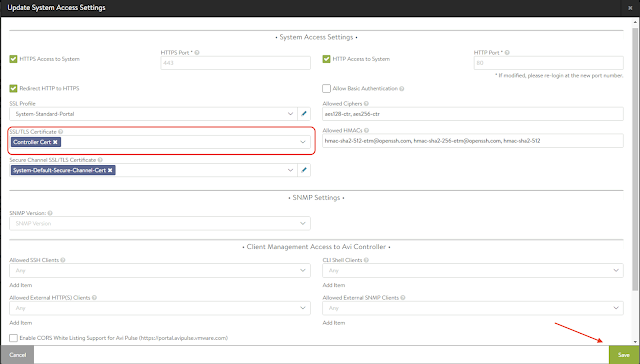

- On the main menu select Administration, Settings tab and then Access Settings;

Click the Edit button on the far right;

- To create a new certificate, click the arrow and select Create Certificate;

I’ll create a self-signed certificate, but I could generate a CSR and import certificates as well.

- Give it a meaningful name and fill in the fields as you would for any certificate.

For the common name I used my controller's FQDN, and its IP address as the SAN; click SAVE;

The new certificate should appear in the SSL/TLS Certificate field; click SAVE;

After changing the certificates you will need to log in again.
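I let the controller generate the certificate, but if you go the CSR route instead, an OpenSSL config file keeps the fields consistent. The sketch below renders one; the function name and example values are hypothetical. It lists the FQDN in the SAN as well as the CN, since current browsers generally validate the SAN rather than the CN.

```python
import ipaddress


def openssl_req_config(fqdn: str, ip: str, org: str = "Lab") -> str:
    """Render an OpenSSL `req` config with CN=fqdn and SANs for fqdn and ip."""
    ipaddress.ip_address(ip)  # raises ValueError for an invalid IP
    lines = [
        "[req]",
        "prompt = no",
        "distinguished_name = dn",
        "x509_extensions = v3_ext",   # used with `openssl req -x509` (self-signed)
        "req_extensions = v3_ext",    # used when generating a CSR
        "",
        "[dn]",
        f"CN = {fqdn}",
        f"O = {org}",
        "",
        "[v3_ext]",
        "subjectAltName = @alt_names",
        "",
        "[alt_names]",
        f"DNS.1 = {fqdn}",
        f"IP.1 = {ip}",
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(openssl_req_config("avi.lab.local", "192.168.10.50"))
```

Saved as `avi.cnf`, it can be used with `openssl req -new -newkey rsa:2048 -nodes -keyout avi.key -out avi.csr -config avi.cnf` to produce a CSR for your CA.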

- Navigate to DNS/NTP and click the Edit button on the far right;

- There you can adjust your DNS settings, but equally important is configuring your NTP servers, removing or adding entries as needed; click SAVE when you are done.
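It's worth confirming that the NTP servers you enter actually answer. Below is a minimal SNTP client sketch for a quick check from your workstation (not something the controller runs); `query_ntp` is a hypothetical helper, and it only reads the server's transmit timestamp without computing offset or delay.

```python
import socket
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def build_sntp_request() -> bytes:
    """48-byte SNTP client request: LI=0, VN=3, Mode=3 packed into 0x1B."""
    return b"\x1b" + bytes(47)


def parse_transmit_time(packet: bytes) -> float:
    """Return the server's transmit timestamp as Unix time in seconds."""
    if len(packet) < 48:
        raise ValueError("NTP packet too short")
    words = struct.unpack("!12I", packet[:48])
    # Words 10 and 11 hold the transmit timestamp (seconds, fraction).
    seconds, fraction = words[10], words[11]
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def query_ntp(server: str, port: int = 123, timeout: float = 3.0) -> float:
    """Send one SNTP request over IPv4 and return the server's time as Unix seconds."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_sntp_request(), (server, port))
        data, _ = sock.recvfrom(512)
    return parse_transmit_time(data)
```

For example, `time.ctime(query_ntp("pool.ntp.org"))` should print the current time if the server is reachable over UDP 123.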

It's time to configure your cloud endpoint: the infrastructure that hosts your workloads, which in this case is vCenter.

- On the main menu select Infrastructure, Clouds tab;

There's a default cloud already created, but as you can see its type is None, meaning it hasn't been configured yet; click the Edit button;

- Select VMware vCenter and click Next;

- Type the username and password of a user with the required privileges on vCenter, select Write if you want the Controller to provision the Service Engines (and trust me, YOU DO), and lastly enter the vCenter name; click NEXT;

- Select the Data Center where the Service Engines will be provisioned.

Enable Prefer Static Routes vs Directly Connected Network and click Next;

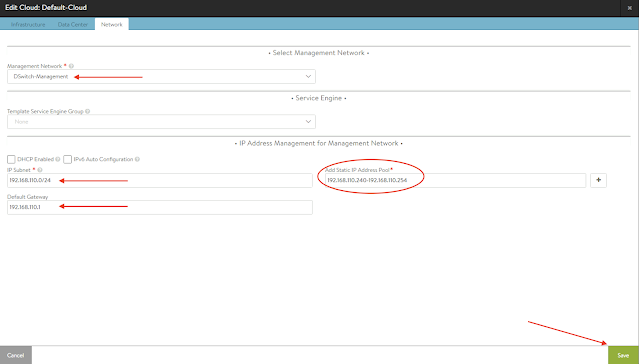

- For the Management Network, select the Port Group designated for management traffic, fill in the subnet information and add a range of IP addresses; click SAVE;

When Service Engines are created on demand, their management interfaces get IPs from this range.
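A typo in this range is easy to make and annoying to chase later, so here's a small validation sketch. It checks that a hypothetical range sits inside the management subnet, avoids the network and broadcast addresses, and doesn't include addresses already in use (gateway, controller, vCenter); it returns how many Service Engine IPs the range provides.

```python
import ipaddress


def validate_static_range(subnet: str, first: str, last: str, reserved=()) -> int:
    """Validate a static IP range and return the number of addresses in it."""
    net = ipaddress.ip_network(subnet)
    start, end = ipaddress.ip_address(first), ipaddress.ip_address(last)
    if start > end:
        raise ValueError("range start is after range end")
    for addr in (start, end):
        if addr not in net:
            raise ValueError(f"{addr} is outside {net}")
        # Checking the endpoints is enough: these are the subnet's lowest/highest addresses.
        if addr in (net.network_address, net.broadcast_address):
            raise ValueError(f"{addr} is the network or broadcast address")
    clashes = [r for r in map(ipaddress.ip_address, reserved) if start <= r <= end]
    if clashes:
        raise ValueError(f"range includes reserved addresses: {clashes}")
    return int(end) - int(start) + 1


if __name__ == "__main__":
    print(validate_static_range("192.168.10.0/24", "192.168.10.100",
                                "192.168.10.119", reserved=["192.168.10.1"]))
```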

If everything is fine, you should now see VMware vCenter as the type, with a green status indicator next to it.
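For reference, the wizard choices above map roughly onto a Cloud object in the controller's REST API. The dictionary below is a sketch under that assumption: the field names (`vtype`, `vcenter_configuration`, `privilege`, `prefer_static_routes`) reflect my understanding of the pre-30.x object model and should be checked against your controller's API reference before you send anything.

```python
import json


def vcenter_cloud_payload(vcenter: str, username: str, password: str,
                          datacenter: str, name: str = "Default-Cloud") -> dict:
    """Mirror the Cloud wizard choices as a dict.

    ASSUMPTION: field names follow the pre-30.x AVI Cloud object as I understand
    it; verify them against your controller's API reference before use.
    """
    return {
        "name": name,
        "vtype": "CLOUD_VCENTER",          # "VMware vCenter" cloud type (assumed enum)
        "prefer_static_routes": True,      # "Prefer Static Routes vs Directly Connected Network"
        "vcenter_configuration": {
            "vcenter_url": vcenter,        # the vCenter name entered in the wizard
            "username": username,
            "password": password,
            "privilege": "WRITE_ACCESS",   # Write mode: the Controller deploys the SEs
            "datacenter": datacenter,
        },
    }


if __name__ == "__main__":
    payload = vcenter_cloud_payload("vcenter.lab.local", "administrator@vsphere.local",
                                    "changeme", "Datacenter")
    payload["vcenter_configuration"]["password"] = "********"  # never print secrets
    print(json.dumps(payload, indent=2))
```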

Service Engines are grouped together to provide concise configuration and easier, faster management. A Service Engine Group defines how the Service Engines are placed, how they are configured and how many of them there are.

- On the Service Engine Group tab there's a default group already created; just click the Edit button next to it;

- There you can configure several aspects of the Service Engines, which is not part of this tutorial, so just stick with the default options.

- Select your High Availability Mode according to your license type;

The Essentials license only allows Legacy HA (Active/Standby) mode.

The Enterprise license also allows Elastic HA (Active/Active or N+M), in addition to Legacy HA.
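The licensing rule can be expressed as a simple pre-flight check. The enum strings below are my assumption of how the API names these modes (`HA_MODE_LEGACY_ACTIVE_STANDBY`, `HA_MODE_SHARED_PAIR`, `HA_MODE_SHARED`), shown next to their UI labels; verify them for your version.

```python
# ASSUMED API enum names, mapped to the UI labels used in this post.
HA_MODES = {
    "HA_MODE_LEGACY_ACTIVE_STANDBY": "Legacy HA (Active/Standby)",
    "HA_MODE_SHARED_PAIR": "Elastic HA (Active/Active)",
    "HA_MODE_SHARED": "Elastic HA (N+M)",
}

# Essentials: Legacy HA only. Enterprise: every mode.
ALLOWED_BY_LICENSE = {
    "essentials": {"HA_MODE_LEGACY_ACTIVE_STANDBY"},
    "enterprise": set(HA_MODES),
}


def ha_mode_allowed(license_tier: str, mode: str) -> bool:
    """Return True if the HA mode is available for the given license tier."""
    allowed = ALLOWED_BY_LICENSE.get(license_tier.strip().lower())
    if allowed is None:
        raise ValueError(f"unknown license tier: {license_tier!r}")
    if mode not in HA_MODES:
        raise ValueError(f"unknown HA mode: {mode!r}")
    return mode in allowed
```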

- Click on Advanced tab;

Configure a name prefix and a folder to organize the Service Engine VMs. The last piece is the Cluster where the SE VMs will be created; click SAVE;

When Kubernetes Services are created, a free IP is pulled from the IP Pool and allocated to the service being provisioned.

- Click on Network tab;

You can see all the Port Groups discovered in the vCenter you just configured in the Cloud section.

- Click the Edit button of the Port Group that will provide the Kubernetes Services, or VIPs if you will.

- On the discovered subnet click on the Edit button;

- Click Add Static IP Address Pool and enter the range of IPs reserved for the VIPs;

Make sure the IP range is now shown and click SAVE;
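To make the allocation idea concrete, here's a toy model of a static pool that hands each new service the lowest free address and returns the same IP if asked twice. It's an illustration of the concept only; `StaticPool` is hypothetical and not how the controller's IPAM is implemented internally.

```python
import ipaddress


class StaticPool:
    """Toy static IP pool: each service gets the lowest free address in the range."""

    def __init__(self, first: str, last: str):
        self._start = ipaddress.ip_address(first)
        self._end = ipaddress.ip_address(last)
        self._in_use = {}  # service name -> allocated address

    def allocate(self, service: str):
        if service in self._in_use:
            return self._in_use[service]  # idempotent: same service, same VIP
        used = set(self._in_use.values())
        addr = self._start
        while addr <= self._end:
            if addr not in used:
                self._in_use[service] = addr
                return addr
            addr += 1
        raise RuntimeError("static pool exhausted")

    def release(self, service: str) -> None:
        self._in_use.pop(service, None)


if __name__ == "__main__":
    pool = StaticPool("192.168.20.100", "192.168.20.101")
    print(pool.allocate("nginx"), pool.allocate("web"))
```

Running out of addresses is the failure mode to watch for: once the range is exhausted, new LoadBalancer Services can't get a VIP, so size the range for the number of Services you expect.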

Since I'm using fully routable networks, I need to specify how the Service network reaches my Workload network, where the K8s nodes are placed. This is done by creating a static route.

- On the Routing tab, click CREATE;

- Fill in the fields with the Workload subnet and, as the next hop, the gateway on the Service Network; click SAVE;

Also, in order to configure the Virtual Services properly, we need to provide some profile information.
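The route only makes sense if its next hop lives on the Service network. This sketch builds the route from hypothetical subnets, validates the next hop, and does a longest-prefix lookup to show which gateway traffic to a node IP would use; the route dict shape is my own, not the controller's.

```python
import ipaddress


def make_static_route(service_subnet: str, workload_subnet: str, next_hop: str) -> dict:
    """Build a route to the Workload subnet via a gateway on the Service network."""
    svc = ipaddress.ip_network(service_subnet)
    dst = ipaddress.ip_network(workload_subnet)
    gw = ipaddress.ip_address(next_hop)
    if gw not in svc:
        raise ValueError(f"next hop {gw} is not on the Service network {svc}")
    if svc.overlaps(dst):
        raise ValueError(f"{dst} overlaps the Service network {svc}")
    return {"prefix": str(dst), "next_hop": str(gw)}


def next_hop_for(routes: list, destination: str):
    """Longest-prefix match: return the next hop for destination, or None."""
    ip = ipaddress.ip_address(destination)
    matches = [r for r in routes if ip in ipaddress.ip_network(r["prefix"])]
    if not matches:
        return None
    best = max(matches, key=lambda r: ipaddress.ip_network(r["prefix"]).prefixlen)
    return best["next_hop"]
```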

- On the main menu select Templates, then the Profiles tab and IPAM/DNS Profiles, and click Create;

- Select IPAM Profile

- Give it a name, select Allocate IP in VRF and click +Add Usable Network;

- Select the Cloud endpoint where your vCenter is configured and the Port Group designated for the Services; click SAVE;

- Back on the Profiles page, click Create again and select DNS Profile this time;

- Give it a name and click on +Add DNS Service Domain;

- Just add your domain name and click SAVE;
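Conceptually, the DNS profile scopes the domain that the virtual services' FQDNs fall under. The helper below is a hypothetical illustration rather than controller code; it shows the label-aware check that matters here: `app.lab.local` belongs to `lab.local`, but `app.notlab.local` does not, even though the string ends with the same characters.

```python
def in_service_domain(fqdn: str, domain: str) -> bool:
    """True if fqdn equals domain or is a subdomain of it (label-aware, case-insensitive)."""
    f = fqdn.rstrip(".").lower().split(".")
    d = domain.rstrip(".").lower().split(".")
    # Compare whole labels from the right, never raw string suffixes.
    return len(f) >= len(d) and f[-len(d):] == d


if __name__ == "__main__":
    for name in ("app.lab.local", "app.notlab.local", "LAB.local."):
        print(name, in_service_domain(name, "lab.local"))
```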

- Go back to the Infrastructure menu, Clouds tab, and click the Edit button for your vCenter Cloud endpoint;

- Make sure to configure the IPAM and DNS Profiles we just created; click SAVE;

If you got this far, THANKS A LOT! Your system is now ready to enable vSphere with Tanzu with NSX Advanced Load Balancer.

If you are not sure how to enable it, just check my post on how to do it.